As consultants at GoDataDriven we get ample opportunity to experiment with new technology, for example at the monthly Hack-What-You-Want Friday, on which we get a full day to try, hack or study anything we like. For bigger projects we have the GoDataDriven Moonshots. Recently another opportunity was added to the mix: Deep Learning Initiatives, during which we get five days to spend on a project that implements deep learning.

The plan

While deep learning is very interesting on its own, of course it is much more interesting when combined with flying objects. In this project we set out with a dream: to build a flying greeter for our beautiful Wibautstraat office. And because drones (quadcopters) are too commonplace nowadays, we decided to try to make an autonomous blimp!

Finding a blimp

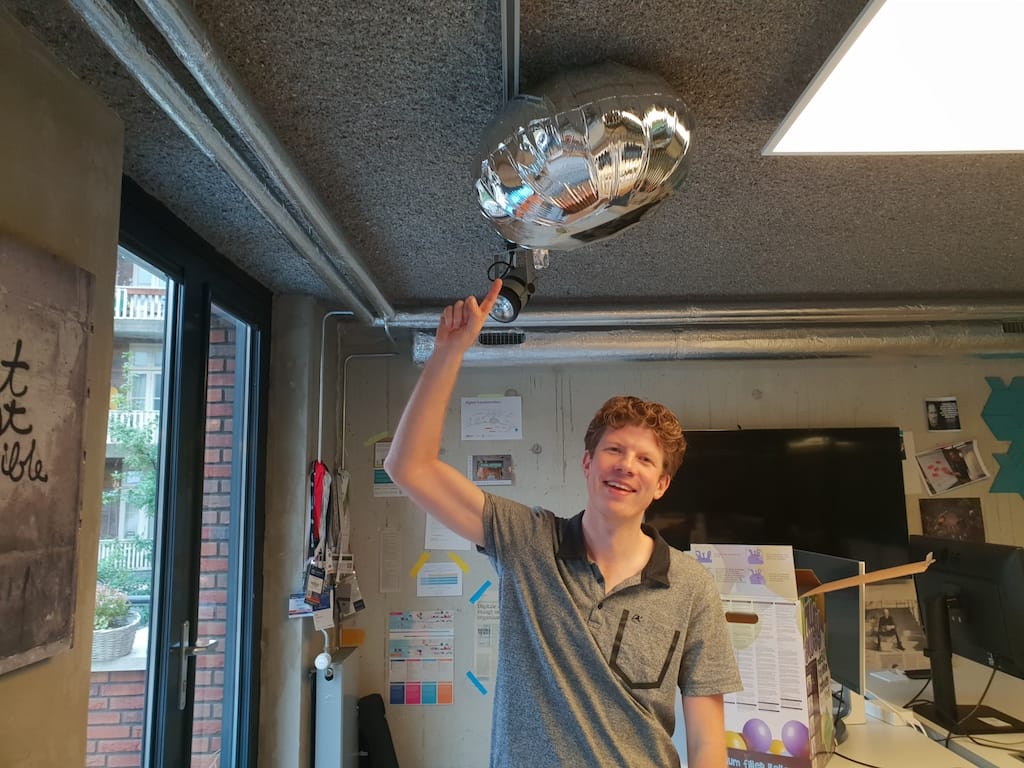

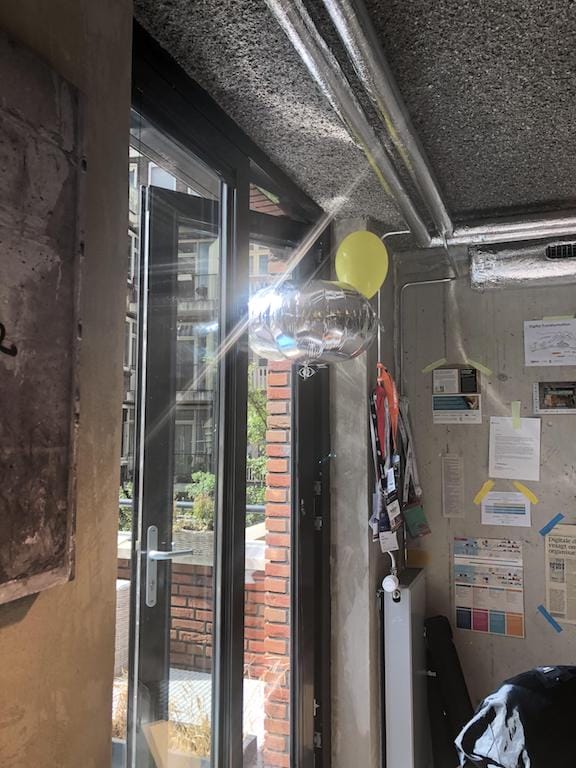

Building a blimp from scratch is cool, but also takes a lot of time. As we were mainly interested in machine learning, we decided to order a blimp package that includes a balloon, controller and propellers. Of course we also needed some helium. Inflating a blimp is literally a breeze. Such joy to see your first blimp stick to the ceiling!

The package contained some counterweights to neutralize the blimp’s buoyancy: very convenient. And it came with a smartphone app to control it. Such a cool toy! But of course we didn’t buy it to fly it ourselves.

Adding brains

The next step was to give the blimp some brains, in the form of a camera and computing power. Unfortunately our blimp can carry at most 15g, or about 10 large paperclips. There aren’t many cameras that weigh as little as 15g, especially when they also require an external battery!

So, attaching a camera to the blimp was not going to cut it. Instead we opted for a setup with a stationary camera attached to a Raspberry Pi. Of course, the Pi is not the best suited to heavy computation, but it is very easy to move around. We installed a Flask webserver which serves images on demand, and set up one of our laptops, a MacBook Pro, as a client which requests the image and applies some deep learning.

Using a stationary camera came with a very large benefit: instead of having to recognize ‘objects’ in the blimp’s field of vision, we just had to recognize the blimp itself. And while a blimp has a very well-defined shape, the objects in our office that a blimp could bump into come in many shapes and sizes.

Detecting a blimp

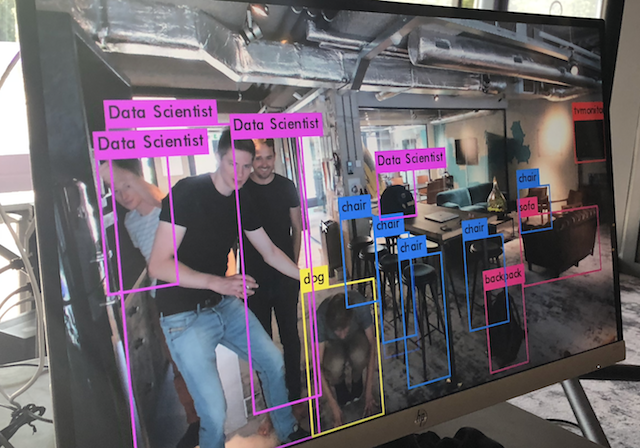

So the first thing we tried was our setup of YOLO, an object recognition model linked to a webcam. It is trained to recognize a plethora of things: chairs, tables, plants, data scientists, and the like.

So, when we turned it on and held the blimp in front of the camera, we of course expected it to recognize the blimp. Indeed the model recognized our blimp, but to our surprise it preferred calling it a ‘handbag’.

Modifying YOLO to correctly recognize our blimp is complicated and time-consuming. Thus our next step was to look for another way to identify a blimp in an image and get an estimate of its position.

We found exactly what we were looking for on Waleed Abdulla’s blog: a pre-trained model which recognizes balloons! And of course, a blimp is a kind of balloon, right? Unfortunately, it turns out that a metallic blimp actually looks quite a bit different than a colorful balloon. Nevertheless the model did recognize it most of the time. A complicating factor here was that the ventilation in our office has the same metallic surface as the blimp: when the blimp hovered in front of the ventilation it would melt into the background.

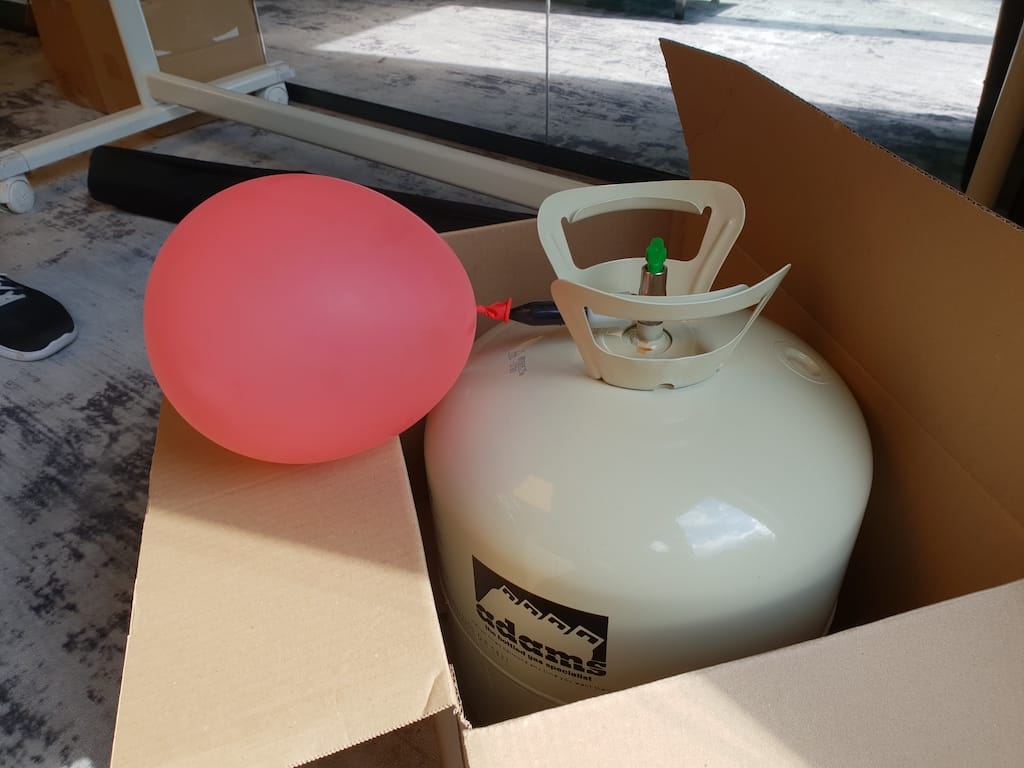

We need more balloons

In the end we came up with a simple solution to our problem: attach a real balloon to the blimp, and use the model to recognize that balloon. So we headed to the supermarket, where we did some comparative research to find the lightest balloons available.

Simply inflating a balloon with air is not sufficient: it is far too heavy for the blimp! Instead, our additional balloon needed some helium as well.

To our great joy we found our model was able to detect the secondary balloon quite effectively. However, it wasn’t very fast: it took about 8 seconds to process one image! The blimp would have to move really slowly to establish a useful feedback loop at this rate.

Enter the GPU

It is never a bad thing to discover you need some extra horsepower. Fortunately, our office has exactly this:

This beauty connected to a NUC makes a great server.

It can classify about 3 images per second, thus producing a nice, smooth stream of feedback.

As long as the blimp moves within the view of the camera, we should be able to derive its position. If the blimp appears on the top part of the screen, its vertical coordinate must be somewhat large. Similarly, the blimp’s horizontal position is found by considering if it appears on the left or the right of the screen. This leaves the depth, which is somewhat more tricky: it needs to be inferred from the apparent size of the blimp. If the blimp is far away from the camera, it appears smaller.

In principle the camera ‘sees’ in spherical coordinates, and the maths for translating these coordinates to normal Cartesian coordinates is fairly simple. But before you can do that, you need to convert the position in the camera image to actual spherical coordinates, and this depends on the angle of view of your lens. We calibrated this conversion by holding the blimp in a fixed position and comparing the camera readout with the actual position.
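
The conversion described above can be sketched as follows. This is a minimal illustration rather than the exact code from the project: it assumes an ideal pinhole camera without lens distortion, and the function names, the field-of-view value and the balloon diameter are all hypothetical. The distance estimate from apparent size is only an approximation, most accurate near the image centre.

```python
import math


def pixel_to_spherical(u, v, box_width_px, image_w, image_h,
                       fov_h_deg, balloon_diameter_m):
    """Convert a detection (centre pixel + box width) to (r, azimuth, elevation).

    Assumes a pinhole camera; fov_h_deg is the horizontal angle of view.
    """
    # Focal length expressed in pixels, derived from the angle of view.
    f = (image_w / 2) / math.tan(math.radians(fov_h_deg) / 2)
    dx = u - image_w / 2          # positive to the right of the centre
    dy = image_h / 2 - v          # image rows grow downward, so flip the sign
    azimuth = math.atan2(dx, f)
    elevation = math.atan2(dy, math.hypot(dx, f))
    # A far-away balloon appears smaller: size_px ~ f * diameter / distance.
    distance = balloon_diameter_m * f / box_width_px
    return distance, azimuth, elevation


def spherical_to_cartesian(r, azimuth, elevation):
    """Return (x, y, z): x to the right, y up, z away from the camera."""
    x = r * math.cos(elevation) * math.sin(azimuth)
    y = r * math.sin(elevation)
    z = r * math.cos(elevation) * math.cos(azimuth)
    return x, y, z


# Hypothetical numbers: 640x480 image, 90 degree lens, 30 cm balloon.
r, az, el = pixel_to_spherical(400, 200, 32, 640, 480, 90.0, 0.3)
position = spherical_to_cartesian(r, az, el)
```

In practice the angle of view and the balloon diameter would be replaced by values obtained from a calibration like the one described above.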

Next up: controlling the blimp.

Hacking the bluetooth controller

The blimp can be controlled remotely over Bluetooth through a smartphone app. Unfortunately, no public API was available and there was no clear way to communicate with it. Instead we decided to take our chances and try one of the available Python Bluetooth libraries (bluepy). After some fiddling we managed to connect to the controller. Inspecting the connection, we found about 10 different ‘characteristics’: registers/variables that hold a value between 0 and 128. Together the values in the characteristics determine the output behaviour of the motor.

As these characteristics don’t have meaningful names and no instructions were available, we set out to do the only useful thing left: write to the registers and try to figure out what they do! After about an hour we managed to write a Python program that maps keyboard presses to the relevant register writes. Time to do our first computer-controlled test flight!
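
To give an idea of what such a program could look like, here is a minimal sketch. It is not the original script: the characteristic handles and the key bindings are hypothetical placeholders, since the real ones had to be discovered by trial and error. The bluepy import is kept inside `connect` so the mapping logic can be tried without a Bluetooth device.

```python
# Hypothetical key bindings: each key triggers (handle, value) writes.
# The handles below are placeholders, NOT the real ones of the blimp.
KEY_BINDINGS = {
    "w": [(0x0021, 100)],               # vertical propeller on
    "s": [(0x0021, 0)],                 # vertical propeller off
    "a": [(0x0023, 100), (0x0025, 0)],  # turn left
    "d": [(0x0023, 0), (0x0025, 100)],  # turn right
}


def commands_for_key(key, bindings=KEY_BINDINGS):
    """Translate a key press into a list of (handle, payload) writes."""
    commands = []
    for handle, value in bindings.get(key, []):
        if not 0 <= value <= 128:
            raise ValueError(f"value {value} outside the 0..128 range")
        commands.append((handle, bytes([value])))
    return commands


def send_key(key, write):
    """Send all writes for a key; `write` is any callable(handle, payload)."""
    for handle, payload in commands_for_key(key):
        write(handle, payload)


def connect(address):
    """Connect with bluepy and list the characteristics that were found."""
    from bluepy.btle import Peripheral  # requires Linux and bluepy installed

    peripheral = Peripheral(address)
    for characteristic in peripheral.getCharacteristics():
        print(characteristic.getHandle(), characteristic.uuid)
    return peripheral
```

With a real device, `send_key("w", connect(address).writeCharacteristic)` would forward the writes over Bluetooth, where `address` is the MAC address of the controller.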

Conquering the vertical plane

As a first attempt at autonomous aviation, we focused only on controlling the vertical axis. Vertical control was by far the easiest: one of the propellers was situated at the bottom of the blimp and directed towards the floor along the vertical axis. Using a PID controller, a widely established control mechanism that computes a correction from the current error, its accumulated history and its rate of change, we managed to stabilize the blimp at a set height.
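
For readers unfamiliar with PID control, the sketch below shows a textbook version of such a controller. It is an illustration under assumptions, not the controller from the project: the gains, the output limits and the measured heights are made-up values that would need tuning on a real blimp.

```python
class PID:
    """Textbook PID controller with a clamped output."""

    def __init__(self, kp, ki, kd, output_limits=(-128.0, 128.0)):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.low, self.high = output_limits  # assumed motor range
        self._integral = 0.0
        self._prev_error = None

    def update(self, setpoint, measurement, dt):
        """Return the control output for one time step of length dt seconds."""
        if dt <= 0:
            raise ValueError("dt must be positive")
        error = setpoint - measurement
        self._integral += error * dt
        # No derivative term on the first call: there is no previous error yet.
        if self._prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self._prev_error) / dt
        self._prev_error = error
        output = (self.kp * error
                  + self.ki * self._integral
                  + self.kd * derivative)
        # Clamp to what the motor can accept.
        return max(self.low, min(self.high, output))


# Hypothetical gains; at roughly 3 detections per second, dt is about 0.33 s.
height_controller = PID(kp=40.0, ki=5.0, kd=10.0)
target_height_m = 1.5
for measured_height_m in (1.0, 1.1, 1.3, 1.45):
    throttle = height_controller.update(target_height_m, measured_height_m, 0.33)
```

Each new height estimate from the camera feeds one `update` call, and the returned value would be written to the characteristic that drives the vertical propeller.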

We quickly ran into some challenges. Soon it became clear the blimp responds to the slightest wind gust. Better not leave any doors open!

Also, the blimp seems to take satisfaction in annoying some of our colleagues.

In the end we figured out a nice docking station.

Masters of the sky

Having found a basic mechanism to keep our blimp airborne, it was time to make it travel somewhere. The blimp features two parallel rear propellers. This means that flying in a set direction is a two-step process: first orient the blimp, then hit the gas. However, the orientation of the blimp could not easily be detected from the camera: a balloon looks roughly the same from any viewpoint! Without knowing the orientation there is no way to determine whether the blimp faces in the desired direction, nor how to close the gap.

Our first attempt involved one of the greatest, universal tools of office organisation: colored stickies. Putting a red sticky on the front and a blue one on the back of the blimp, we should be able to distinguish between the two. This would tell us whether our blimp was oriented towards or away from the camera. In practice, this didn’t work out: one sticky is not sufficient to distinguish a color, and more stickies turn the blimp into a brick. Other methods such as colored balloons destabilized the blimp or confused the model.

Finally, we came up with an easier task: flying circles. The trick is to apply some throttle so that the blimp starts moving in its starting orientation. The resulting change in position generates feedback that informs the controller how the balloon should be reoriented. In the end this worked pretty well!
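
One way to turn this kind of motion feedback into a steering signal is sketched below. This is our illustration of the idea, not the code from the project: it assumes positions in the horizontal plane (x to the right, z away from the camera), and the function names and the minimum step size are hypothetical.

```python
import math


def heading_from_motion(prev_xz, curr_xz, min_step=0.05):
    """Estimate the heading (radians) from two consecutive positions.

    Returns None when the blimp moved less than min_step metres, because
    the direction of such a small displacement is mostly noise.
    """
    dx = curr_xz[0] - prev_xz[0]
    dz = curr_xz[1] - prev_xz[1]
    if math.hypot(dx, dz) < min_step:
        return None
    return math.atan2(dx, dz)  # 0 means moving straight away from the camera


def heading_error(heading, curr_xz, target_xz):
    """Signed angle to turn, wrapped into [-pi, pi)."""
    desired = math.atan2(target_xz[0] - curr_xz[0], target_xz[1] - curr_xz[1])
    return (desired - heading + math.pi) % (2 * math.pi) - math.pi


# Hypothetical positions in metres: the blimp drifted forward after a throttle burst.
heading = heading_from_motion((0.0, 2.0), (0.0, 2.5))
if heading is not None:
    turn = heading_error(heading, (0.0, 2.5), (1.0, 2.5))
```

A positive `turn` means the target lies to the right of the current direction of travel, so the rear propellers would need to rotate the blimp accordingly.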

Nature’s iron fist

All good things come to an end, and our blimp’s end was very clear when it arrived: after an impressive number of crashes the vertical propeller of the blimp broke down. A natural death, a worthy end, in the name of science.

Did our deep learning blimp inspire you?

Go build one of your own with the knowledge you acquire during our three-day Deep Learning course!